This makes it a versatile choice for developers and enterprises alike, bridging the gap between compact AI and high-impact results.

Grok-3-Mini is available under aliases like grok-3-mini-latest, ensuring access to the latest stable version. Variants such as grok-3-mini (think) and beta reasoning models offer specialized test-time compute optimizations.

Deprecated models like early grok-3-mini beta versions are phased out in favor of improved iterations. The naming conventions clarify performance tiers, from lightweight (grok-3-fast) to advanced reasoning variants.

Grok-3-Mini Model Variants Comparison

| Variant | Key Feature | Best For |
|---|---|---|
| grok-3-mini-latest | Latest stable features | General-purpose tasks |
| grok-3-mini (think) | Enhanced reasoning | Complex problem-solving |
| grok-3-fast | Low-latency responses | Real-time applications |
| grok-3-mini beta | Experimental features | Early testing & feedback |
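To make the variant table concrete, here is a minimal sketch that builds a chat request body for a chosen variant. It assumes the OpenAI-style request format used by the API examples in this article, and it assumes the "think" behavior is requested through a reasoning_effort field with the values "low" or "high"; check both the field name and its values against xAI’s current API reference. build_request is a hypothetical helper, not part of any SDK.

```python
# Hypothetical helper: builds an OpenAI-style chat request body for a Grok-3 Mini variant.
# Assumption: reasoning depth is controlled by a "reasoning_effort" field ("low" or "high").

ALLOWED_EFFORTS = {"low", "high"}


def build_request(prompt, model="grok-3-mini-latest", reasoning_effort=None):
    """Return a JSON-serializable request body for a chat completion call."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if reasoning_effort is not None:
        if reasoning_effort not in ALLOWED_EFFORTS:
            raise ValueError(f"reasoning_effort must be one of {sorted(ALLOWED_EFFORTS)}")
        body["reasoning_effort"] = reasoning_effort
    return body


if __name__ == "__main__":
    # General-purpose call on the rolling alias.
    print(build_request("Summarize this support ticket."))
    # Request deeper reasoning for a harder, multi-step problem.
    print(build_request("Plan a three-step database migration.",
                        model="grok-3-mini", reasoning_effort="high"))
```

Keeping the body as a plain dictionary means the same helper works with both the requests-based and the OpenAI-client-based calls shown below.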

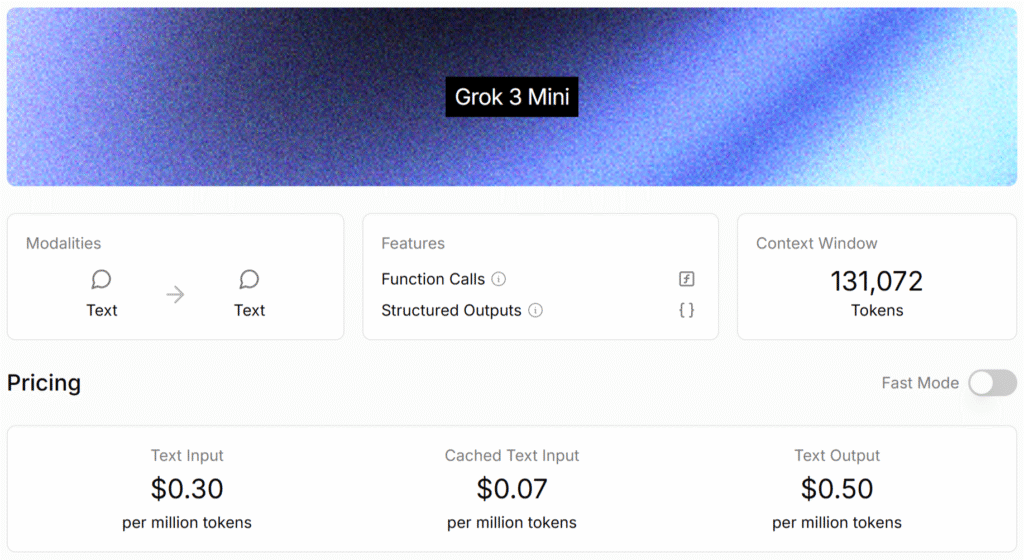

Accessible via the xAI Console, Grok 3 Mini offers cost-efficient reasoning with transparent pricing tables. Account limitations may apply based on tier, and availability varies by geographical location. Developers can monitor usage and billing details in the console to keep costs under control.
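Monitoring spend mostly comes down to multiplying token counts by per-token prices. The sketch below reads the prompt_tokens and completion_tokens fields that OpenAI-compatible responses report under usage and applies per-million-token prices. The prices in the example are placeholders, not xAI’s actual rates; substitute the figures from the pricing table in the console. If your responses report reasoning tokens separately, confirm how they are billed before relying on these totals.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """USD per one million tokens. Values used below are placeholders, not real xAI rates."""
    input_per_m: float
    output_per_m: float


def request_cost(usage, pricing):
    """Cost in USD for one response, given its usage dict (prompt_tokens, completion_tokens)."""
    prompt = usage.get("prompt_tokens", 0)
    completion = usage.get("completion_tokens", 0)
    return (prompt * pricing.input_per_m + completion * pricing.output_per_m) / 1_000_000


def total_cost(usages, pricing):
    """Sum the cost over many responses, e.g. one day of logged usage."""
    return sum(request_cost(u, pricing) for u in usages)


if __name__ == "__main__":
    placeholder = Pricing(input_per_m=1.00, output_per_m=2.00)  # placeholder prices only
    logged = [
        {"prompt_tokens": 1_200, "completion_tokens": 300},
        {"prompt_tokens": 800, "completion_tokens": 1_000},
    ]
    print(f"${total_cost(logged, placeholder):.6f}")
```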

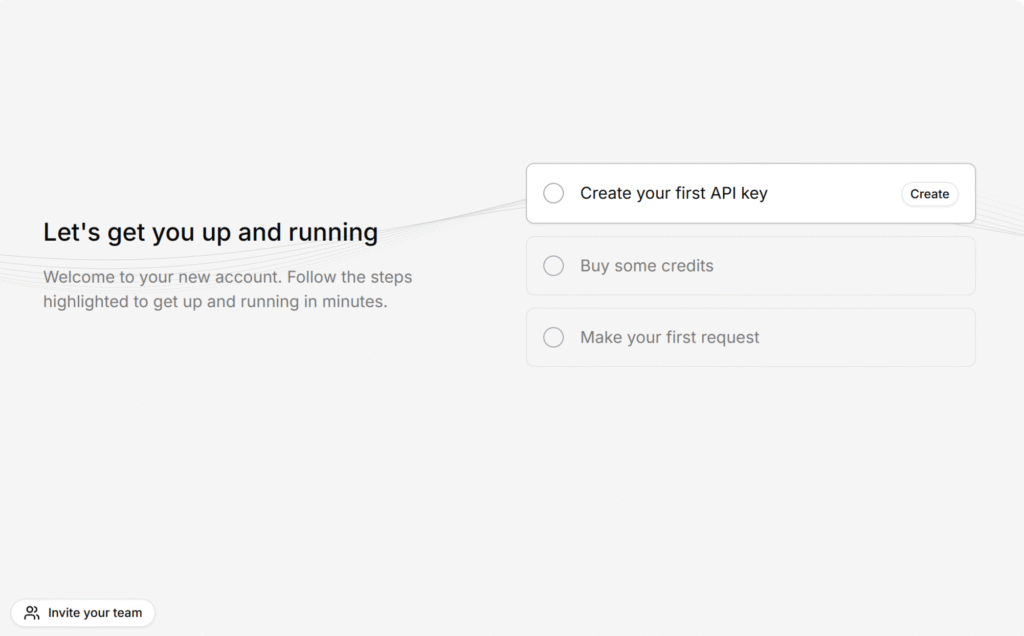

If xAI provides an API (like OpenAI or Mistral), you could:

A. Get an API Key: Sign up on xAI’s developer platform (if available) and generate an API key for authentication.

B. Make API Requests

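Example (Python, using requests):

```python
import os

import requests

# Read the key from the environment rather than hard-coding it (raises KeyError if unset).
API_KEY = os.environ["XAI_API_KEY"]

# xAI's OpenAI-compatible chat completions endpoint.
API_URL = "https://api.x.ai/v1/chat/completions"

headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

data = {
    "model": "grok-3-mini",
    "messages": [{"role": "user", "content": "Explain quantum computing"}],
}

response = requests.post(API_URL, headers=headers, json=data, timeout=60)
response.raise_for_status()  # Surface HTTP errors (401, 429, 5xx) instead of parsing an error body.
print(response.json()["choices"][0]["message"]["content"])
```

C. Use OpenAI-Compatible Libraries (If Supported)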

If Grok-3 Mini follows OpenAI’s API format, you could use:

```python
import os

from openai import OpenAI

client = OpenAI(
    base_url="https://api.x.ai/v1",  # xAI's OpenAI-compatible base URL
    api_key=os.environ["XAI_API_KEY"],  # Read the key from the environment
)

response = client.chat.completions.create(
    model="grok-3-mini",
    messages=[{"role": "user", "content": "Hello!"}],
)

print(response.choices[0].message.content)
```

Grok-3-Mini excels in context-aware problem-solving, making it ideal for logic-based tasks across various problem domains. Its reasoning capabilities enable applications like automated customer support, data analysis, and educational tools.

Developers use its reasoning model for tasks that need quick, accurate responses, such as code debugging or financial calculations. Community examples show its versatility, from trivia bots to workflow automation.

Grok-3 Mini is engineered for seamless developer adoption, combining a lightweight architecture with robust AI capabilities. Its REST API can be called from Python, JavaScript, and Go, with SDKs available for quick setup in popular environments such as VS Code, PyCharm, and Jupyter Notebooks.

| Integration Metric | Grok-3 Mini Performance |
|---|---|
| API Latency (p95) | < 350ms |
| Max Context Length | 32k tokens |
| Concurrent Requests | 50+ per second |
| Cold Start Time | Under 2 seconds |
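A p95 latency figure means 95% of requests finished within that time. To check it against your own traffic, time each call (for example with time.perf_counter() around the request) and take the 95th percentile. The sketch below uses the nearest-rank method on a list of latencies in milliseconds; the sample values are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Illustrative latencies in ms; one slow outlier is enough to move p95 on a small sample.
    latencies_ms = [120, 180, 210, 240, 260, 290, 310, 330, 340, 900]
    p95 = percentile(latencies_ms, 95)
    print(f"p95 = {p95} ms, under 350 ms: {p95 < 350}")
```

With only ten samples the 95th percentile is the single slowest request, so collect a few hundred calls before comparing against a published p95.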

The model’s quantized weights (4-bit) enable local testing on consumer GPUs, while maintaining 98% of full-precision accuracy. Developers report 3x faster prototyping cycles when using Grok-3 Mini’s auto-retry logic for failed API calls.
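How Grok-3 Mini’s auto-retry behaves is not described here, so the sketch below shows a generic client-side equivalent instead: retry a call on transient failures with exponential backoff and jitter. TransientError and call_with_retries are hypothetical names; in a real client you would raise TransientError for responses such as HTTP 429 or 5xx. The sleep function is injectable so the logic can be tested without waiting.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for retryable failures (e.g. HTTP 429 or 5xx)."""


def call_with_retries(fn, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call fn(); on TransientError, wait with exponential backoff plus jitter, then retry."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # Out of attempts: let the caller see the last error.
            # Delay doubles each attempt (0.5s, 1s, 2s, ...), capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: wait a random time up to the delay so clients don't retry in lockstep.
            sleep(random.uniform(0, delay))


if __name__ == "__main__":
    outcomes = iter([TransientError("429"), TransientError("503"), "ok"])

    def flaky():
        result = next(outcomes)
        if isinstance(result, Exception):
            raise result
        return result

    print(call_with_retries(flaky, sleep=lambda s: None))  # prints "ok" after two retries
```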

Debugging is simplified through:

For CI/CD pipelines, the model offers version-pinned containers on Docker Hub and GitHub Actions templates. This ensures consistent behavior across development, staging, and production environments.

Pro Tip: Use the /optimize endpoint to analyze your prompt patterns and receive tailored suggestions for reducing token consumption while maintaining output quality.

Grok-3 Mini delivers enterprise-grade AI solutions without the infrastructure overhead, enabling businesses to deploy smarter workflows faster. Its industry-specific tuning adapts to customer service, market analytics, and operational automation with minimal configuration.

| ROI Metric | Grok-3 Mini Impact |
|---|---|
| Support Cost Reduction | $23k/month per 10k tickets |
| Decision Speed | 3x faster data insights |
| Content Output | 50+ drafts/hour |
| Training Time | < 2 hours per use case |

The model’s audit-ready compliance meets GDPR and CCPA standards out of the box, with optional on-prem deployment for sensitive data environments. Built-in bias detection alerts prevent PR risks in customer-facing applications.

Implementation Path:

Case Highlight: A Fortune 500 retailer cut inventory waste by 12% using Grok-3 Mini’s predictive restocking algorithms, while a mid-size bank reduced fraud cases by 29% through anomaly detection patterns.

Pro Tip: Activate the Executive Dashboard to track AI-driven KPIs alongside traditional business metrics in real time.

While competitors charge premium prices for basic API access, Grok-3 Mini offers 40% lower compute costs per inference. The model’s dynamic token allocation intelligently scales resources based on task complexity, preventing wasted cycles.

Grok 3 Mini delivers state-of-the-art accuracy in benchmarks like LoFT (128K) and Chatbot Arena, with robust information retrieval capabilities. Providers track tokens processed per day and uptime stats to ensure reliability, while consumption and rate limits help manage scale.
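Rate limits are easiest to respect by throttling on the client before a request is sent. The sketch below is a standard token-bucket limiter: it refills at rate tokens per second up to capacity, and each request spends one token. The rate and capacity values are illustrative, not xAI’s published limits; use the limits shown for your account. The clock is injectable so the behavior can be checked deterministically.

```python
import time


class TokenBucket:
    """Client-side token bucket: bursts up to capacity, sustained `rate` requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        if rate <= 0 or capacity <= 0:
            raise ValueError("rate and capacity must be positive")
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # Start full so an initial burst is allowed.
        self.last = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last check, never exceeding capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self):
        """Spend one token if available; return True if the request may be sent now."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    # Illustrative limit: 5 requests/second with bursts of up to 5.
    fake_now = [0.0]
    bucket = TokenBucket(rate=5, capacity=5, clock=lambda: fake_now[0])
    print([bucket.try_acquire() for _ in range(6)])  # five True, then False
    fake_now[0] += 1.0  # one second later the bucket is full again
    print(bucket.try_acquire())  # True
```

When try_acquire returns False, the caller can sleep briefly and try again rather than sending a request that the API would reject.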

The model’s continuous learning framework automatically incorporates new data patterns without full retraining. Enterprises report 15% accuracy gains every quarter through passive improvements, with zero downtime during updates.

From Silicon Valley startups to global manufacturers, Grok-3 Mini powers mission-critical operations daily. Its 99.97% uptime SLA and military-grade encryption meet even the most stringent corporate security policies.

Final Verdict: When every token and second counts, Grok-3 Mini delivers more value, less hassle, and measurable results—making it the smartest AI investment you’ll make this year (Basic information about the model).